Networking is fun. It should also be pragmatic. The goal should be to get traffic from Point A to Point B as efficiently and securely as possible.

Many networks in production have been architected like service provider networks, or the way networking vendors want them designed. These networks do provide service; they just rarely have the scaling requirements of an ISP. Such designs are usually built by people who love networking and want to see as much of it as possible, at the cost of being impractical and expensive.

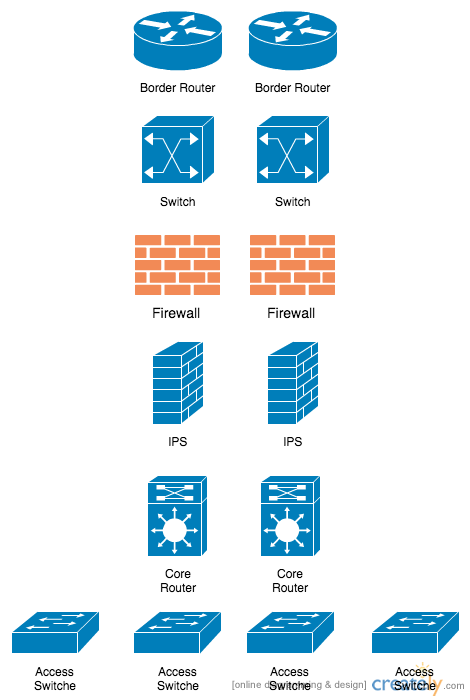

Here’s an example of what I’ve described:

The architecture above contains:

- Border Routers that connect to the Internet and are the first hop for the IPs provided by the ISP

- Core Routers or Switches that handle routing between internal networks

- Distribution Routers or Switches that aggregate Access Switches. They will either pass traffic between locally connected access switches or forward traffic to the core to be routed

- Access Switches that provide physical Ethernet connectivity for endpoints (clients and servers)

- Security Gateways that may include multiple layers of firewalls, Network IPS, Web Gateways, and Email Gateways

There are usually a few other extraneous network elements as well: routers that connect to partner networks, proxy servers, VPN concentrators, and legacy environments that people are reluctant to change because they have been in place for years.

Although this design is highly scalable, it is overkill for most enterprises (anyone other than an ISP). It is like putting out a match with a fire hose.

Because of its complexity, this architecture carries high capital and operational costs and introduces many potential points of failure. The most serious problem with this design, though, is its blind spots and lack of visibility. Much of the internal traffic travels from an endpoint to a switch and then to another switch without ever reaching the security gateways, so it cannot be inspected. This is troubling from a security perspective: it’s estimated that in most network breaches the attacker makes six lateral movements once inside the network. Attackers move laterally to find the data they want and then to find an exit point, and any network security policy needs to account for this movement.
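To make the blind spot concrete, here is a minimal Python sketch that models the layered design as a graph and checks whether the path between two devices ever crosses a security device. The device names and links are hypothetical, and the model assumes traffic follows the shortest physical path, ignoring VLANs, routing tables, and redundant links.

```python
from collections import deque

# Hypothetical topology modeling the layered design: border, security
# gateway, core, distribution, and access layers.
TOPOLOGY = {
    "internet": ["border"],
    "border": ["internet", "security-gw"],
    "security-gw": ["border", "core"],
    "core": ["security-gw", "dist-1", "dist-2"],
    "dist-1": ["core", "access-1", "access-2"],
    "dist-2": ["core", "access-3"],
    "access-1": ["dist-1", "laptop"],
    "access-2": ["dist-1", "file-server"],
    "access-3": ["dist-2", "db-server"],
    "laptop": ["access-1"],
    "file-server": ["access-2"],
    "db-server": ["access-3"],
}

SECURITY_DEVICES = {"security-gw"}


def shortest_path(graph, src, dst):
    """Breadth-first search; returns the list of hops from src to dst, or None."""
    parents = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for neighbor in graph[node]:
            if neighbor not in parents:
                parents[neighbor] = node
                queue.append(neighbor)
    return None


def is_inspected(graph, src, dst):
    """True if the path between src and dst passes through a security device."""
    path = shortest_path(graph, src, dst)
    return path is not None and any(hop in SECURITY_DEVICES for hop in path)


for target in ("file-server", "db-server", "internet"):
    hops = shortest_path(TOPOLOGY, "laptop", target)
    print(" -> ".join(hops), "| inspected:", is_inspected(TOPOLOGY, "laptop", target))
```

In this model, only the Internet-bound flow passes the security gateway. Both server flows stay inside the switching layers, which is exactly the lateral path an attacker would use.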

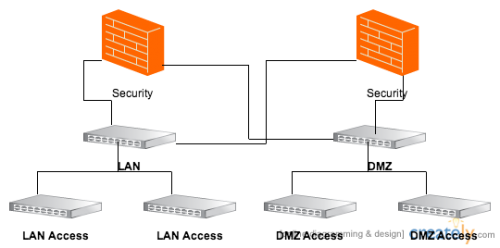

For better management and security, much of the functionality above should be collapsed into far fewer layers:

- Firewalls

- Access/Distribution Switches

In this architecture, the Internet connection terminates at the security gateways. For a highly available Internet connection, each firewall should have two physical connections to the ISP (connections to two different ISPs are also possible). Where needed, dynamic routing protocols can run on the security gateways' WAN interfaces: BGP for Internet high availability, or OSPF across an MPLS WAN. Threat modules should be enabled on the security gateways to perform network intrusion prevention and malware prevention. All site-to-site and client-based VPNs should terminate at the security gateways, too.
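The failover idea behind dual uplinks can be sketched as a simplified route-selection model: each uplink offers a default route with a preference, and the gateway uses the most-preferred route whose link is up. This resembles a floating static route. It is not how BGP chooses paths (BGP's best-path selection weighs many attributes). The ISP names and next-hop addresses, taken from the documentation ranges, are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DefaultRoute:
    """A candidate default route (0.0.0.0/0) out one ISP uplink (hypothetical model)."""
    isp: str
    next_hop: str
    preference: int  # lower value is preferred, similar in spirit to administrative distance
    link_up: bool


def active_default_route(routes: List[DefaultRoute]) -> Optional[DefaultRoute]:
    """Return the most-preferred default route whose uplink is up, or None if all are down."""
    usable = [r for r in routes if r.link_up]
    if not usable:
        return None
    return min(usable, key=lambda r: r.preference)


uplinks = [
    DefaultRoute("ISP-A", "192.0.2.1", preference=1, link_up=True),
    DefaultRoute("ISP-B", "198.51.100.1", preference=10, link_up=True),
]
print(active_default_route(uplinks).isp)  # prints ISP-A while both links are up
uplinks[0].link_up = False
print(active_default_route(uplinks).isp)  # prints ISP-B after the primary link fails
```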

Access switches should connect to the security gateways' inside interfaces with as few intermediate switch hops as possible. The switches should run in layer 2 mode only, and every layer 3 VLAN interface should terminate on the security gateways. Traffic between VLANs is therefore switched up to the security gateways and routed there, so security policy applies to as much internal traffic as possible. If the required number of access switches exceeds the number of ports on the security gateway, distribution switches must be added to aggregate the access switch uplinks, as depicted in the diagram above. The distribution switches then connect to the security gateways over trunks.
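The two rules in the previous paragraph can be expressed as simple checks. The following Python sketch decides whether a distribution layer is needed at all and flags any switch that still carries layer 3 VLAN interfaces. The inventory format, function names, and port counts are hypothetical, and HA pairs and redundant uplinks are not modeled.

```python
import math
from typing import Dict, List


def distribution_switches_needed(access_switches: int, gateway_ports: int,
                                 dist_downlink_ports: int) -> int:
    """0 if every access switch fits on the gateway's inside ports,
    otherwise how many distribution switches are needed to aggregate them."""
    if access_switches <= gateway_ports:
        return 0
    needed = math.ceil(access_switches / dist_downlink_ports)
    if needed > gateway_ports:
        raise ValueError("not enough gateway ports for the distribution trunks")
    return needed


def layer3_on_switches(inventory: Dict[str, dict]) -> List[str]:
    """List switches that still have layer 3 VLAN interfaces; the design
    expects every layer 3 VLAN to terminate on the security gateway."""
    return sorted(name for name, dev in inventory.items()
                  if dev["role"] == "switch" and dev["l3_vlans"])


inventory = {
    "fw-pair": {"role": "gateway", "l3_vlans": [10, 20, 30]},
    "access-1": {"role": "switch", "l3_vlans": []},
    "access-2": {"role": "switch", "l3_vlans": [20]},  # leftover layer 3 interface to remove
}
print(distribution_switches_needed(48, 8, 44))  # 2
print(layer3_on_switches(inventory))            # ['access-2']
```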

With a next-generation security platform, access control and security change dramatically. For example, take the simple scenario of IT engineers who need access to the servers to keep things running. If the LAN and the server network are separated only by VLANs, there is no real room for access control. Anyone can move between the two networks as long as they have credentials.

If there is a firewall between these networks, however, a policy has to be implemented. On joining the organization, each engineer must request a static IP address, which takes time, and then request access to the server network from that address. The firewall team updates the policy with the new user's source IP address, and the destinations are likely to be a long list of IP addresses and TCP and UDP services. One problem is that anyone can assign that static IP to their own computer. Another is that the administrator is tied to one physical location (wherever their endpoint sits) when accessing the servers. Finally, as people leave the organization, the policy is rarely cleaned up and becomes unwieldy.
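The weakness of an address-based rule is easy to see in a minimal Python sketch. The rule format, addresses, and function name below are hypothetical. The point is that the match depends only on addresses and ports, so the rule has no idea which person is using the permitted source IP.

```python
import ipaddress

# Hypothetical address-based rule: one engineer's static IP -> server subnet.
IP_RULES = [
    {
        "src": ipaddress.ip_address("10.1.20.15"),
        "dst": ipaddress.ip_network("10.50.0.0/24"),
        "services": {("tcp", 22), ("tcp", 3389)},
    },
]


def ip_rule_allows(src: str, dst: str, proto: str, port: int) -> bool:
    """Match purely on addresses and ports; nothing here knows who the user is."""
    s, d = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    return any(s == r["src"] and d in r["dst"] and (proto, port) in r["services"]
               for r in IP_RULES)


# Whoever configures 10.1.20.15 on their machine inherits the engineer's access.
print(ip_rule_allows("10.1.20.15", "10.50.0.10", "tcp", 22))  # True
print(ip_rule_allows("10.1.20.99", "10.50.0.10", "tcp", 22))  # False
```

If the engineer leaves, this rule keeps allowing 10.1.20.15 until someone remembers to delete it.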

In the same environment, a much simpler and more secure policy is possible. The policy can identify the source of management traffic by the Active Directory group for IT engineers rather than (or in addition to) a static IP address. As soon as an engineer is added to or removed from that group, their access across the network is granted or revoked. Rather than opening ports and protocols that malicious actors can abuse, the policy can allow only the applications actually required (RDP, SSH) and block everything else. Finally, with network intrusion prevention and anti-malware enabled, malicious behavior inside the allowed sessions can be detected and blocked.
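Here is a minimal Python sketch of the identity- and application-based rule described above. `DIRECTORY` is a hypothetical stand-in for an Active Directory group lookup, and the application labels (`"rdp"`, `"ssh"`) are assumed to come from the firewall's application identification. Neither integration is modeled here.

```python
from typing import Dict, Set

# Hypothetical stand-in for an Active Directory group lookup: user -> groups.
DIRECTORY: Dict[str, Set[str]] = {
    "alice": {"IT-Engineers"},
    "bob": {"Finance"},
}

# Each rule: user group -> destination zone -> allowed applications.
POLICY = [
    {"group": "IT-Engineers", "zone": "servers", "apps": {"rdp", "ssh"}},
]


def identity_policy_allows(user: str, zone: str, app: str) -> bool:
    """Allow only if the user's group and the identified application match a rule."""
    groups = DIRECTORY.get(user, set())
    for rule in POLICY:
        if rule["group"] in groups and rule["zone"] == zone and app in rule["apps"]:
            return True
    return False  # anything not explicitly allowed is denied


print(identity_policy_allows("alice", "servers", "ssh"))  # True
print(identity_policy_allows("alice", "servers", "smb"))  # False: not an allowed application
print(identity_policy_allows("bob", "servers", "rdp"))    # False: not in the group

# Removing alice from the group revokes access without editing the policy.
DIRECTORY["alice"].discard("IT-Engineers")
print(identity_policy_allows("alice", "servers", "ssh"))  # False
```

The policy itself never mentions an IP address, so it stays the same size no matter how many engineers join or leave.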

In summary, these are the benefits of this design:

- Capital cost savings - We have eliminated at least three layers of physical appliances. Individual security gateways may cost more because they need more capacity, but overall equipment costs still drop by approximately 50 percent because there is far less hardware.

- Operational cost savings - The complex routing and filtering now happens on a single security gateway (or HA pair), so most daily changes and troubleshooting take place on one pair of devices. The switches can all run in simple layer 2 mode. Fewer devices need to be examined when there’s a problem, which saves time. Because the operations team will spend most of its time on the security appliances, it will quickly develop stronger security skills, which also shortens repair times. Less rack space, power, and cooling are required, which brings further operational savings.

- Better security - The more traffic that we route on an internal core network, the less traffic will be visible to the security gateways. By putting the default gateway for all networks on the security gateway, traffic between those networks will be scrutinized.
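The reason the default gateway placement matters comes from how a host forwards packets. If the destination is on its own subnet, the host delivers the packet directly at layer 2. Otherwise it sends the packet to its default gateway. The Python sketch below uses hypothetical addresses. It also shows the flip side: traffic between two hosts in the same VLAN never reaches the gateway, so it is traffic *between* networks that the security gateway inspects.

```python
import ipaddress
from typing import Optional


def forwarding_target(host_if: str, dst_ip: str, default_gateway: str) -> Optional[str]:
    """Return None if dst is on the host's own subnet (delivered at layer 2),
    otherwise the default gateway the packet is sent to."""
    iface = ipaddress.ip_interface(host_if)
    if ipaddress.ip_address(dst_ip) in iface.network:
        return None
    return default_gateway


# Hypothetical VLAN 10 host whose default gateway is the security gateway.
print(forwarding_target("10.10.1.20/24", "10.10.1.30", "10.10.1.1"))  # None
print(forwarding_target("10.10.1.20/24", "10.20.1.5", "10.10.1.1"))   # 10.10.1.1
```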

As virtual machines become more common in modern datacenters, make sure the security platform you select can also be deployed in virtual and public cloud environments, so that the same policy and visibility carry over to those workloads.

Complexity and obscurity are the real enemies of security and availability. Simplicity and efficiency are key allies.