How Does EDR Leverage Machine Learning?

Machine learning is a subset of artificial intelligence (AI) that involves training algorithms to recognize patterns and make data-driven decisions. EDR uses machine learning to improve its ability to detect, analyze, and respond to threats in real time, which makes ML-enabled EDR a critical component of modern cybersecurity strategies. In the context of EDR, machine learning enhances threat detection and response in the following areas:

- Behavioral Analysis: Machine learning algorithms analyze the behavior of applications and processes on endpoints to detect anomalies that may indicate malicious activity.

- Threat Intelligence: Machine learning models continuously learn from new data to improve their understanding of known and emerging threats, enhancing threat detection accuracy.

- Predictive Analytics: Machine learning can predict potential threats based on historical data and patterns, allowing proactive threat mitigation.

- Automated Response: Machine learning enables automated responses to identified threats, reducing the time to mitigate and remediate security incidents.

How EDR and ML Work Together

In today's rapidly evolving cybersecurity landscape, Endpoint Detection and Response (EDR) systems increasingly integrate machine learning to enhance their threat detection and response capabilities.

By leveraging machine learning, EDR systems can analyze vast amounts of data in real time, identify complex patterns and anomalies, and respond to threats far faster and more consistently than manual analysis allows.

This combination helps organizations defend proactively against sophisticated cyber threats, reduce false positives, and adapt continuously to new and emerging attack vectors. Together, EDR and machine learning form a dynamic, adaptive defense strategy that strengthens endpoint security against advanced cyber threats.

Data Collection in EDR Systems

EDR continuously collects vast amounts of data from endpoints, including system logs, running processes, network activities, file modifications, and user behaviors. This data provides a comprehensive view of the endpoint's state and activities, essential for identifying and responding to threats.

Machine learning uses the collected data to train models. This large, varied dataset lets the models learn what normal and abnormal activity look like, so they can flag potential security threats more accurately.
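To make this concrete, the sketch below aggregates a handful of raw endpoint events into one numeric feature vector per host, the kind of input a model could be trained on. The `EndpointEvent` fields (`process`, `action`, `bytes_out`) and the chosen features are hypothetical assumptions, not any real EDR telemetry schema.

```python
from collections import Counter
from dataclasses import dataclass


# Hypothetical telemetry record; real EDR products define their own schemas.
@dataclass
class EndpointEvent:
    host: str
    process: str
    action: str      # e.g. "file_write", "net_connect", "proc_start"
    bytes_out: int   # bytes sent over the network (0 if not applicable)


def host_features(events: list[EndpointEvent]) -> dict[str, list[float]]:
    """Aggregate raw events into one illustrative feature vector per host:
    [event count, distinct processes, file writes, network connections, total bytes out]
    """
    by_host: dict[str, list[EndpointEvent]] = {}
    for e in events:
        by_host.setdefault(e.host, []).append(e)

    features = {}
    for host, evs in by_host.items():
        actions = Counter(e.action for e in evs)  # missing actions count as 0
        features[host] = [
            float(len(evs)),
            float(len({e.process for e in evs})),
            float(actions["file_write"]),
            float(actions["net_connect"]),
            float(sum(e.bytes_out for e in evs)),
        ]
    return features


events = [
    EndpointEvent("ws-01", "chrome.exe", "net_connect", 1200),
    EndpointEvent("ws-01", "winword.exe", "file_write", 0),
    EndpointEvent("ws-02", "powershell.exe", "net_connect", 50000),
]
print(host_features(events))
```

Real pipelines extract far more features, but the principle is the same: raw telemetry becomes fixed-length numeric vectors that a model can learn from.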

Threat Detection with EDR and Machine Learning

EDR utilizes predefined rules and signatures to detect known threats. These rules are based on previously identified attack patterns and behaviors, providing a foundational layer of security.

Machine learning enhances threat detection by identifying anomalies and patterns that deviate from normal behavior, even if they don't match known signatures. This capability is crucial for detecting new, unknown threats (zero-day threats) that traditional signature-based methods might miss.
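A minimal sketch of how the two layers can work together: a set of known-bad file hashes acts as the signature layer, and an anomaly score acts as a fallback for files that no signature covers. The hash set, the `anomaly_score` input (a stand-in for a trained model's output), and the 0.8 threshold are hypothetical placeholders.

```python
import hashlib

# Hypothetical signature database: SHA-256 digests of known-malicious files.
KNOWN_BAD_SHA256 = {
    hashlib.sha256(b"known malware sample").hexdigest(),
}

ANOMALY_THRESHOLD = 0.8  # assumed cut-off for the ML layer


def classify_file(content: bytes, anomaly_score: float) -> str:
    """Check signatures first, then fall back to an ML anomaly score.

    `anomaly_score` stands in for a trained model's output
    (0 = looks normal, 1 = highly unusual).
    """
    digest = hashlib.sha256(content).hexdigest()
    if digest in KNOWN_BAD_SHA256:
        return "malicious (signature match)"
    if anomaly_score >= ANOMALY_THRESHOLD:
        return "suspicious (anomalous, no signature)"
    return "no detection"


print(classify_file(b"known malware sample", anomaly_score=0.1))
print(classify_file(b"never-seen binary", anomaly_score=0.93))
print(classify_file(b"ordinary document", anomaly_score=0.05))
```

The second call is the case the paragraph describes: no signature matches, but the anomaly layer still raises the file for review.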

Behavioral Analysis for Enhanced Security

EDR monitors the behavior of applications and processes on endpoints, looking for suspicious activities that could indicate a security breach.

Machine learning analyzes these behaviors in real time, using historical data to differentiate between benign and malicious activities. It can detect subtle changes in behavior that may indicate an advanced persistent threat (APT), providing an additional layer of security.
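One common behavioral signal is a parent process launching a child it has rarely or never launched before, such as a word processor starting a shell. The sketch below uses a simple frequency model as a stand-in for a trained one: it counts parent-child pairs from historical process-creation events and flags rare pairs. The process names, history, and the `min_count` cut-off are illustrative assumptions.

```python
from collections import Counter


def learn_lineage(history: list[tuple[str, str]]) -> Counter:
    """Count how often each (parent, child) process pair appeared historically."""
    return Counter(history)


def is_unusual(lineage: Counter, parent: str, child: str, min_count: int = 3) -> bool:
    """Flag a launch whose parent-child pair was seen fewer than min_count times."""
    return lineage[(parent, child)] < min_count  # unseen pairs count as 0


# Hypothetical historical process-creation events for one endpoint
history = (
    [("explorer.exe", "winword.exe")] * 40
    + [("explorer.exe", "chrome.exe")] * 55
    + [("services.exe", "svchost.exe")] * 120
)
lineage = learn_lineage(history)

print(is_unusual(lineage, "explorer.exe", "winword.exe"))    # routine behavior
print(is_unusual(lineage, "winword.exe", "powershell.exe"))  # never seen before
```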

Predictive Analytics in EDR

EDR primarily focuses on responding to threats as they occur, providing real-time protection against ongoing attacks.

Machine learning introduces predictive analytics by identifying potential threats based on patterns and trends in historical data. This predictive capability allows organizations to take proactive measures, reducing the risk of future attacks and improving overall security posture.
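As a deliberately simple stand-in for predictive analytics, the sketch fits an ordinary least-squares linear trend to daily counts of one security signal (here, failed logins on a host) and projects it a week ahead. The data, the seven-day horizon, and the alert level are hypothetical; production systems use much richer models than a straight line.

```python
def linear_trend(values: list[float]) -> tuple[float, float]:
    """Least-squares fit y = slope * x + intercept with x = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two points to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def forecast(values: list[float], days_ahead: int) -> float:
    """Project the fitted trend `days_ahead` steps past the last observation."""
    slope, intercept = linear_trend(values)
    return slope * (len(values) - 1 + days_ahead) + intercept


failed_logins = [4, 5, 7, 9, 12, 14, 17]  # hypothetical daily counts
ALERT_LEVEL = 25                          # assumed level worth acting on

projected = forecast(failed_logins, days_ahead=7)
if projected > ALERT_LEVEL:
    print(f"Projected {projected:.1f} failed logins/day in a week: investigate now")
```

The point is the proactive shape of the decision: the alert fires on where the trend is heading, before the count actually reaches the alert level.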

Automated Response with Machine Learning

EDR can be configured to respond to detected threats with predefined actions, such as isolating an affected endpoint or terminating a malicious process.

Machine learning enhances automated responses by continuously learning from each incident. This feedback loop helps refine response strategies, making them more effective. Machine learning models can adapt to new threats, ensuring that automated responses remain relevant and efficient.
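The sketch below pairs a predefined response playbook with a simple feedback loop: analyst verdicts on past alerts nudge the score at which automatic containment is triggered. This threshold adjustment is a simplified illustration of learning from incidents, not a trained model; the action names, starting threshold, and step size are hypothetical, and real products expose their own vendor-specific response interfaces.

```python
from dataclasses import dataclass


@dataclass
class ResponsePolicy:
    isolate_threshold: float = 0.9  # assumed score at which the endpoint is isolated
    step: float = 0.02              # how far one analyst verdict moves the threshold

    def decide(self, score: float) -> list[str]:
        """Map a model's threat score to hypothetical response actions."""
        if score >= self.isolate_threshold:
            return ["kill_process", "isolate_endpoint", "notify_soc"]
        if score >= self.isolate_threshold - 0.3:
            return ["notify_soc"]
        return []

    def feedback(self, score: float, was_malicious: bool) -> None:
        """Adjust the threshold using an analyst's verdict on a past alert."""
        if was_malicious and score < self.isolate_threshold:
            # A confirmed threat slipped under the bar: respond more aggressively.
            self.isolate_threshold = max(0.5, self.isolate_threshold - self.step)
        elif not was_malicious and score >= self.isolate_threshold:
            # A benign event was contained: respond more conservatively.
            self.isolate_threshold = min(0.99, self.isolate_threshold + self.step)


policy = ResponsePolicy()
print(policy.decide(0.89))  # below the isolation threshold: notify only
policy.feedback(0.89, was_malicious=True)
print(policy.decide(0.89))  # after feedback, the same score triggers isolation
```

Clamping the threshold between 0.5 and 0.99 keeps a run of one-sided feedback from turning automatic isolation fully on or fully off.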

Forensic Analysis Enhanced by Machine Learning

EDR provides detailed forensic analysis to understand the scope and impact of an attack, helping security teams investigate and respond effectively.

Machine learning enhances forensic capabilities by identifying connections and correlations between events and activities. This deeper insight into the attack's origin and behavior supports more thorough investigations and better-informed responses.
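Correlating events often starts with reconstructing the chain of process launches behind an alert. The sketch below walks parent process IDs back from a suspicious process to its earliest known ancestor. The event data and PIDs are hypothetical, and ML-driven correlation in real products goes well beyond this single parent-child relationship.

```python
# Hypothetical process-creation events: pid -> (parent_pid, image name)
process_events = {
    100: (1, "explorer.exe"),
    200: (100, "outlook.exe"),
    300: (200, "winword.exe"),
    400: (300, "powershell.exe"),
    500: (400, "rundll32.exe"),
}


def attack_chain(events: dict[int, tuple[int, str]], pid: int) -> list[str]:
    """Return the ancestry of `pid` as image names, oldest ancestor first."""
    chain = []
    seen = set()  # guards against loops caused by PID reuse in messy data
    while pid in events and pid not in seen:
        seen.add(pid)
        parent, image = events[pid]
        chain.append(image)
        pid = parent
    return list(reversed(chain))


print(" -> ".join(attack_chain(process_events, 500)))
```

Read left to right, the chain shows an email client opening a document that spawned a scripting shell, which is the kind of context an investigator needs to judge scope and origin.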

How EDR Leverages Machine Learning

Anomaly Detection with Machine Learning

Machine learning models in EDR systems are trained to recognize normal behavior on endpoints. When deviations from this norm occur, the system flags them as potential threats. This method is particularly effective for detecting previously unknown threats, providing an additional layer of security beyond traditional signature-based detection.
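A minimal statistical version of this idea learns the mean and standard deviation of one metric during a known-good period and flags new observations that fall too many standard deviations away. The metric (outbound connections per hour), the sample values, and the three-sigma cut-off are assumptions; production models typically combine many features.

```python
import statistics


def fit_baseline(samples: list[float]) -> tuple[float, float]:
    """Learn 'normal' as the mean and sample standard deviation of historical values."""
    return statistics.mean(samples), statistics.stdev(samples)


def is_anomalous(value: float, baseline: tuple[float, float], k: float = 3.0) -> bool:
    """Flag values more than k standard deviations from the baseline mean."""
    mean, std = baseline
    if std == 0:
        return value != mean
    return abs(value - mean) / std > k


# Hypothetical outbound connections per hour on one workstation
normal_hours = [12, 15, 11, 14, 13, 16, 12, 14]
baseline = fit_baseline(normal_hours)

print(is_anomalous(15, baseline))   # within the normal range
print(is_anomalous(240, baseline))  # far outside it
```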

Pattern Recognition and Threat Detection

Machine learning excels at recognizing complex patterns in large datasets. EDR leverages this capability to identify patterns associated with malicious activities that traditional rule-based systems might miss. This enhanced pattern recognition improves the accuracy and efficiency of threat detection.
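Learned pattern recognition can be illustrated with a tiny multinomial naive Bayes classifier over command-line tokens: instead of relying on a hand-written rule for each technique, it learns which tokens tend to appear in commands labeled malicious. The training commands and labels are invented for illustration; a real model would need far more data and more careful tokenization.

```python
import math
from collections import Counter


def tokenize(cmd: str) -> list[str]:
    return cmd.lower().split()


def train(samples: list[tuple[str, str]]):
    """Count token frequencies per label for multinomial naive Bayes."""
    token_counts: dict[str, Counter] = {}
    label_counts: Counter = Counter()
    for cmd, label in samples:
        label_counts[label] += 1
        token_counts.setdefault(label, Counter()).update(tokenize(cmd))
    vocab = {t for counts in token_counts.values() for t in counts}
    return token_counts, label_counts, vocab


def predict(model, cmd: str) -> str:
    """Return the label with the highest log-posterior, using Laplace (add-one) smoothing."""
    token_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, counts in token_counts.items():
        denom = sum(counts.values()) + len(vocab)
        score = math.log(label_counts[label] / total)  # log prior
        for tok in tokenize(cmd):
            score += math.log((counts[tok] + 1) / denom)  # log likelihood per token
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical labeled command lines
training = [
    ("powershell -enc SQBFAFgA -nop -w hidden", "malicious"),
    ("powershell -nop -w hidden -c iex", "malicious"),
    ("cmd /c whoami & net user", "malicious"),
    ("powershell get-childitem c:\\users", "benign"),
    ("cmd /c dir c:\\projects", "benign"),
    ("notepad.exe report.txt", "benign"),
]
model = train(training)
print(predict(model, "powershell -w hidden -nop -enc aBcD"))
print(predict(model, "cmd /c dir c:\\temp"))
```

The first command was never seen verbatim and reorders its flags, yet it is still classified as malicious because its tokens match the learned pattern. An exact-match rule would miss it.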

Integrating Threat Intelligence

EDR systems can feed threat intelligence into their machine learning models, which then learn from global threat data about the latest attack vectors and techniques. This continuous learning helps EDR systems detect new and evolving threats and keeps the organization's defenses current.
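In practice, integrating threat intelligence often starts with matching local telemetry against indicators of compromise (IOCs) from a feed, then using the matches to enrich alerts or as model features. The feed layout, indicator values, and field names below are hypothetical. The IP addresses come from documentation-reserved ranges (RFC 5737), and the domain uses the reserved `.example` TLD.

```python
from dataclasses import dataclass, field

# Hypothetical IOC feed snapshot; real feeds (e.g. STIX/TAXII) carry much richer metadata.
IOC_FEED = {
    "ip": {"203.0.113.66", "198.51.100.23"},
    "domain": {"update-check.example"},
}


@dataclass
class NetworkEvent:
    host: str
    dest_ip: str
    dest_domain: str
    intel_hits: list[str] = field(default_factory=list)


def enrich(event: NetworkEvent, feed: dict[str, set[str]]) -> NetworkEvent:
    """Attach any matching indicators so detection logic can weigh them."""
    if event.dest_ip in feed.get("ip", set()):
        event.intel_hits.append(f"ip:{event.dest_ip}")
    if event.dest_domain in feed.get("domain", set()):
        event.intel_hits.append(f"domain:{event.dest_domain}")
    return event


evt = enrich(NetworkEvent("ws-07", "203.0.113.66", "update-check.example"), IOC_FEED)
print(evt.intel_hits)
```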

Reducing False Positives with Machine Learning

A persistent challenge in threat detection is a high number of false positives. Machine learning helps EDR systems reduce false positives by distinguishing more reliably between legitimate and malicious activities based on historical data and behavioral analysis. With fewer false positives, security teams can focus on genuine threats, which improves overall efficiency.
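The trade-off behind false-positive reduction can be seen by sweeping the alerting threshold over model scores for events whose true labels are known. The scores and labels below are invented for illustration.

```python
def confusion_at(scores: list[float], labels: list[bool], threshold: float) -> dict[str, int]:
    """Count outcomes when alerting on score >= threshold (label True = malicious)."""
    tp = sum(s >= threshold and y for s, y in zip(scores, labels))
    fp = sum(s >= threshold and not y for s, y in zip(scores, labels))
    fn = sum(s < threshold and y for s, y in zip(scores, labels))
    return {"true_pos": tp, "false_pos": fp, "missed": fn}


# Hypothetical model scores for ten events and their analyst-confirmed labels
scores = [0.95, 0.91, 0.72, 0.66, 0.60, 0.41, 0.35, 0.30, 0.12, 0.05]
labels = [True, True, False, True, False, False, False, False, False, False]

for t in (0.3, 0.5, 0.7, 0.9):
    print(t, confusion_at(scores, labels, t))
```

With these numbers, raising the threshold from 0.3 to 0.5 cuts false positives from five to two while still catching all three threats. Pushing it to 0.9 removes every false positive but misses one real threat. A model that separates benign from malicious activity more cleanly widens the range of thresholds where both numbers stay low.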

Real-Time Processing for Immediate Threat Response

Machine learning models can process data in real time, allowing EDR systems to detect and respond to threats as they unfold rather than after the fact. This real-time capability is crucial for minimizing the impact of attacks and preventing lateral movement within the network. A prompt response helps contain and mitigate potential breaches before they spread.
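Real-time detection usually means scoring each event as it arrives rather than in periodic batches. The sketch keeps a sliding time window per source host and raises an alert when a host contacts an unusual number of distinct internal hosts within that window, which is a crude signal of lateral movement. The `LateralMovementMonitor` class, the 60-second window, and the limit of 20 peers are hypothetical choices.

```python
from collections import Counter, defaultdict, deque


class LateralMovementMonitor:
    """Streaming detector: distinct destinations per source within a sliding time window."""

    def __init__(self, window_seconds: float = 60.0, max_peers: int = 20):
        self.window = window_seconds
        self.max_peers = max_peers
        self.events = defaultdict(deque)         # source -> deque of (timestamp, dest)
        self.peer_counts = defaultdict(Counter)  # source -> dest counts inside the window

    def observe(self, ts: float, source: str, dest: str) -> bool:
        """Ingest one connection event; return True if the source exceeds the peer limit."""
        q, peers = self.events[source], self.peer_counts[source]
        q.append((ts, dest))
        peers[dest] += 1
        # Evict events older than the window, keeping only (ts - window, ts].
        while q and q[0][0] <= ts - self.window:
            _, old_dest = q.popleft()
            peers[old_dest] -= 1
            if peers[old_dest] == 0:
                del peers[old_dest]
        return len(peers) > self.max_peers


monitor = LateralMovementMonitor(window_seconds=60, max_peers=20)
# A host touching a new internal address every second
alerts = [monitor.observe(t, "ws-13", f"10.0.0.{t}") for t in range(25)]
print(alerts.index(True))  # index of the first event that triggers an alert
```

Each event is handled in amortized constant time, so the check can run inline as telemetry arrives instead of waiting for a batch job.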

Adaptive Learning for Evolving Threats

Machine learning models continuously learn from new data, adapting to changing environments and evolving threats. This adaptive learning ensures that EDR systems remain effective, even as attackers develop new techniques. The continuous improvement of machine learning models keeps the organization's defenses robust and up-to-date.
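One simple way to let a baseline adapt is an exponentially weighted moving average (EWMA) of the mean and variance, so recent observations gradually outweigh older ones. The `AdaptiveBaseline` class, the smoothing factor `alpha`, the starting values, and the three-sigma rule are assumptions for illustration.

```python
import math


class AdaptiveBaseline:
    """Exponentially weighted mean and variance that track gradual change in a metric."""

    def __init__(self, initial_mean: float, initial_var: float, alpha: float = 0.1, k: float = 3.0):
        self.mean = initial_mean
        self.var = initial_var
        self.alpha = alpha  # weight given to each new observation
        self.k = k          # anomaly cut-off in standard deviations

    def is_anomalous(self, x: float) -> bool:
        std = math.sqrt(self.var)
        return std > 0 and abs(x - self.mean) > self.k * std

    def update(self, x: float) -> bool:
        """Score x, then fold it into the baseline only if it looked normal."""
        anomalous = self.is_anomalous(x)
        if not anomalous:
            diff = x - self.mean
            incr = self.alpha * diff
            self.mean += incr
            # Incremental exponentially weighted variance update
            self.var = (1 - self.alpha) * (self.var + diff * incr)
        return anomalous


baseline = AdaptiveBaseline(initial_mean=100.0, initial_var=25.0)
for value in [101, 99, 103, 104, 400, 106]:
    print(value, baseline.update(value))
```

Only the spike to 400 is flagged, and it is kept out of the baseline. Updating only on non-anomalous values stops the flagged activity itself from pulling the baseline toward it. A slow, gradual ramp can still shift the baseline, though, which is exactly how legitimate drift is absorbed.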

Workflow Example of EDR and Machine Learning Integration

By leveraging machine learning, EDR systems become more intelligent, adaptive, and capable of handling sophisticated and evolving cyber threats, providing a robust defense mechanism for organizations. The integration of machine learning enhances the overall effectiveness of EDR, ensuring comprehensive and proactive cybersecurity.

Data Ingestion and Baseline Establishment

- EDR collects data from endpoints, including logs, processes, and user behaviors.

- Machine learning models process and analyze this data to establish a baseline of normal behavior, creating a reference point for detecting anomalies.

Continuous Monitoring for Anomaly Detection

- EDR monitors endpoints for deviations from the established baseline.

- Machine learning algorithms analyze real-time data to detect anomalies, identifying potential threats that deviate from normal patterns.

Threat Detection and Analysis

- When an anomaly is detected, EDR flags it for further analysis.

- Machine learning models assess the anomaly, determining its likelihood of being a threat based on learned patterns and historical data. This assessment helps prioritize and categorize potential threats.

Automated Response and Continuous Improvement

- If a threat is confirmed, EDR can initiate automated responses such as isolating the affected endpoint, terminating malicious processes, and notifying security teams.

- Machine learning helps refine these responses by learning from each incident, improving the accuracy and effectiveness of future responses. This continuous improvement helps EDR systems adapt to new threats.
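The four workflow stages can be tied together in one short sketch: build a baseline from ingested data, monitor new readings against it, grade deviations, and map them to responses, with analyst feedback folded back in. Everything here (the hypothetical `EdrPipeline` class, the single metric, the z-score grading, the thresholds, and the action names) is a simplification meant to show the flow, not how any particular EDR product is built.

```python
import statistics
from dataclasses import dataclass, field


@dataclass
class EdrPipeline:
    history: list[float]       # ingested historical values for one metric
    alert_z: float = 3.0       # assumed thresholds, in standard deviations
    isolate_z: float = 6.0
    log: list[str] = field(default_factory=list)

    def baseline(self) -> tuple[float, float]:
        # Stage 1: baseline of normal behavior from historical data
        return statistics.mean(self.history), statistics.stdev(self.history)

    def process(self, host: str, value: float) -> str:
        mean, std = self.baseline()
        # Stage 2: continuous monitoring, measuring deviation from the baseline
        z = abs(value - mean) / std if std else 0.0
        # Stage 3: detection and analysis, grading the anomaly by severity
        if z >= self.isolate_z:
            action = "isolate"  # Stage 4: automated response (hypothetical action names)
        elif z >= self.alert_z:
            action = "alert"
        else:
            action = "none"
            self.history.append(value)  # normal readings keep refining the baseline
        if action != "none":
            self.log.append(f"{host}: {action} (z={z:.1f})")
        return action

    def analyst_feedback(self, value: float, benign: bool) -> None:
        # Stage 4: continuous improvement, folding confirmed false positives into the baseline
        if benign:
            self.history.append(value)


pipe = EdrPipeline(history=[12, 15, 11, 14, 13, 16, 12, 14])
for host, value in [("ws-01", 14), ("ws-02", 19), ("ws-03", 240)]:
    print(host, pipe.process(host, value))
print(pipe.log)
```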

The Future of EDR: Predictions and Emerging Trends

AI has become a common buzzword in today’s technological landscape. In security, AI-driven capabilities allow EDR systems to keep learning from observed attacks and threats and to refine their strategies for countering them.

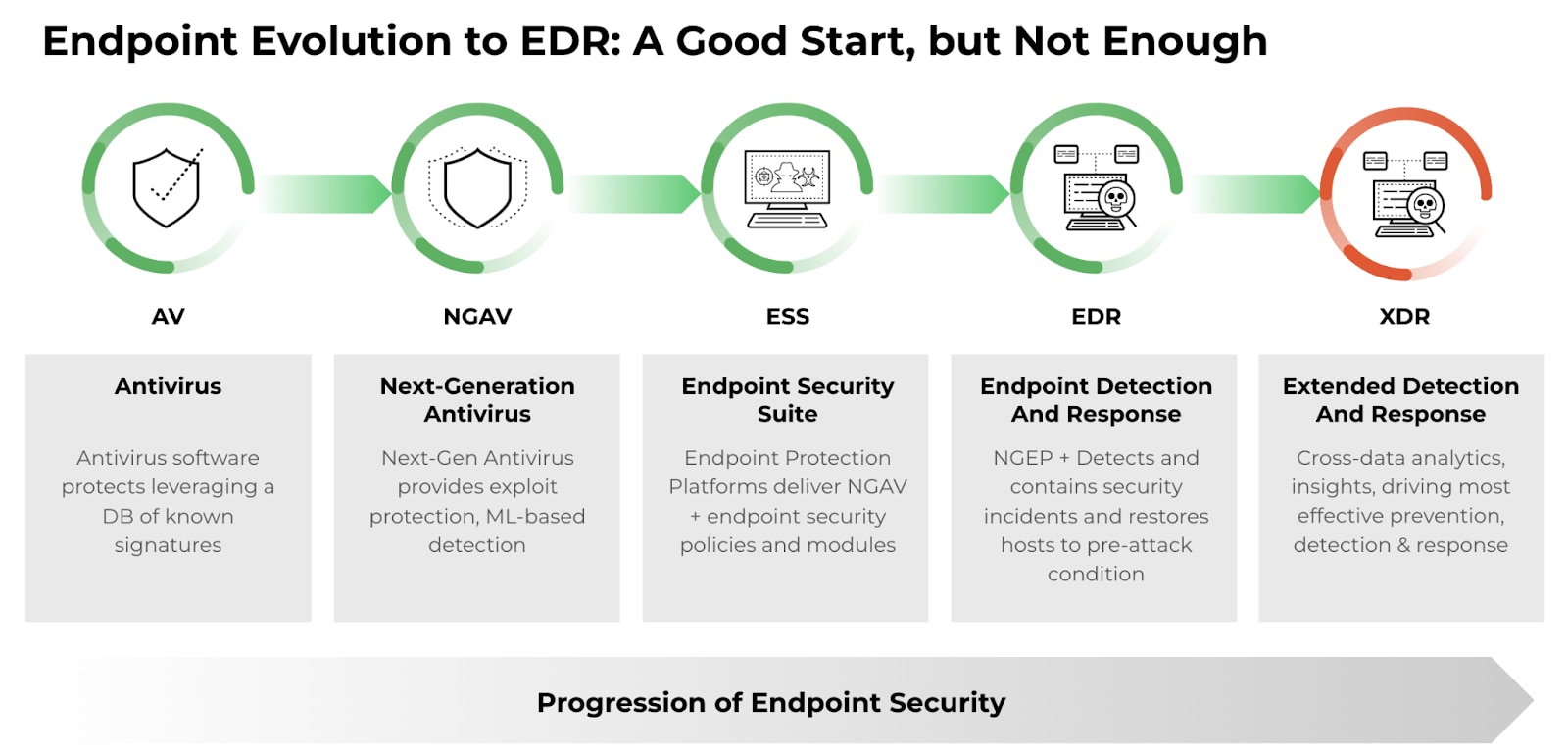

However, today’s enterprises need comprehensive security coverage across multiple environments, stronger threat detection through data correlation, and streamlined security operations. These needs are what a newer approach, Extended Detection and Response (XDR), sets out to meet.

XDR integrates data from multiple security layers to enable better detection of sophisticated threats, leveraging machine learning and analytics. It provides a unified platform for managing and analyzing security data, improving efficiency and response times for security teams. Additionally, it helps analysts identify hidden threats by analyzing behavioral anomalies across endpoints, networks, and cloud services.


Organizations must try to stay ahead of attackers in the cybersecurity landscape. Attackers constantly develop new forms of malicious programs and probe defenses to see what works. To keep up with these threats, security technology must continue to evolve, as EDR has evolved into XDR.

How EDR Leverages Machine Learning FAQs

Key considerations when choosing an EDR solution include:

- Integration with existing infrastructure: Ensuring the EDR solution integrates seamlessly with current IT and security systems.

- Ease of use and management: The solution should be user-friendly and manageable with available resources.

- Detection and response capabilities: Evaluating the effectiveness of the EDR's threat detection, analysis, and response features.

- Scalability and performance: The ability to handle the organization's size and complexity without performance degradation.

- Support and updates: Availability of vendor support, regular updates, and access to threat intelligence to keep the solution current with evolving threats.

The performance of a machine learning model is evaluated using various metrics depending on the type of problem. Common metrics include:

- Accuracy: The proportion of correctly classified instances out of the total instances.

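- Precision, Recall, and F1 Score: Classification metrics. Precision is the share of items flagged as malicious that actually are malicious; recall is the share of actually malicious items that were flagged; the F1 score is the harmonic mean of precision and recall.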

- Mean Squared Error (MSE): Used in regression tasks to measure the average squared difference between predicted and actual values.

- AUC-ROC: The area under the receiver operating characteristic curve is used to measure the ability of a classifier to distinguish between classes.
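The metrics above can be computed directly from a detector's outputs. The sketch assumes binary labels (1 = malicious) and uses invented scores with an assumed 0.5 decision threshold. AUC-ROC is computed through its rank interpretation: the probability that a randomly chosen malicious sample scores higher than a randomly chosen benign one, with ties counting as half.

```python
def classification_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, recall, and F1 for binary labels (1 = malicious)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(y_true), "precision": precision, "recall": recall, "f1": f1}


def auc_roc(y_true: list[int], scores: list[float]) -> float:
    """Fraction of (malicious, benign) pairs ranked correctly; ties count as half."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def mse(actual: list[float], predicted: list[float]) -> float:
    """Mean squared error for regression outputs."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.1, 0.05]
y_pred = [int(s >= 0.5) for s in scores]

print(classification_metrics(y_true, y_pred))
print(auc_roc(y_true, scores))
```

In security data, where malicious events are usually rare, accuracy alone can look high even for a detector that misses most threats. For that reason, precision and recall are normally reported alongside it.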

Common challenges in developing and deploying machine learning models include:

- Data Quality: Ensuring that the data used for training is clean, accurate, and representative.

- Overfitting and Underfitting: Balancing the complexity of the model to avoid overfitting (the model too closely fits the training data) and underfitting (the model is too simple to capture the underlying patterns).

- Scalability: Handling large volumes of data efficiently.

- Bias and Fairness: Ensuring that models do not learn and perpetuate biases present in the training data.